Observability

This page describes how to configure different observability tools to work with Kong Mesh.

Demo setup

kumactl ships with a built-in observability stack that consists of:

- Prometheus for metrics

- Jaeger for ingesting and storing traces

- Loki for ingesting and storing logs

- Grafana for querying and displaying metrics, traces, and logs

First, remember to configure Kong Mesh appropriately for the tools in the observability stack:

- MeshMetric for telemetry

- MeshTrace for tracing

- MeshAccessLog for logging

On Kubernetes, the stack can be installed with:

kumactl install observability | kubectl apply -f -

This creates a mesh-observability namespace with Prometheus, Jaeger, Loki, and Grafana installed and set up to work with Kong Mesh.

This setup is meant for trying out Kong Mesh. It is in no way fit for use in production. For production setups, we recommend referring to each project’s website or using a hosted solution such as Grafana Cloud or Datadog.

Control plane observability

The control plane supports metrics and traces for observability.

Metrics

Control plane metrics are exposed on port :5680 and available under the standard path /metrics.
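As a rough illustration, a Prometheus scrape job for the control plane metrics endpoint could look like the sketch below. The job name is made up, and the target host is an assumption that reuses the control plane service name from the Prometheus example later on this page; replace it with the address of your control plane.

```yaml
scrape_configs:
  - job_name: 'kong-mesh-control-plane'   # hypothetical job name
    metrics_path: /metrics                # standard path exposed by the control plane
    static_configs:
      - targets:
          # assumed address; 5680 is the control plane metrics port described above
          - 'kong-mesh-control-plane.kong-mesh-system.svc:5680'
```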

Traces

Kong Mesh can be configured to export OpenTelemetry traces. It exports traces for:

- API server

- KDS on global. Note that only basic information about the connections to zones is traced, nothing resource-specific.

- Inter CP server

To enable tracing, set the KUMA_TRACING_OPENTELEMETRY_ENABLED / tracing.openTelemetry.enabled control plane config variable to "true" and configure OpenTelemetry using the standard OTEL_EXPORTER_OTLP_* environment variables.
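For instance, on Kubernetes the two settings might be passed to the control plane container as environment variables, roughly as in the fragment below. This is only a sketch: the collector address is a hypothetical placeholder, and how you inject environment variables depends on how the control plane was installed.

```yaml
# Fragment of the control plane container spec (not a complete manifest)
env:
  - name: KUMA_TRACING_OPENTELEMETRY_ENABLED
    value: "true"
  # Standard OpenTelemetry exporter variable; the endpoint below is a made-up example
  - name: OTEL_EXPORTER_OTLP_ENDPOINT
    value: "http://otel-collector.observability.svc:4317"
```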

Configuring Prometheus

Kong Mesh 2.6.0 introduced a bug in the MADS server. If you changed the default Prometheus configuration for kuma_sd_configs.fetch_timeout, this bug can delay the delivery of monitoring assignments to Prometheus. As a result, Prometheus does not collect metrics from new data plane proxies for that period of time. To work around this issue, configure kuma_sd_configs as follows:

```yaml
kuma_sd_configs:
  - fetch_timeout: 0s
```

This disables long polling on Prometheus service discovery.

The Kong Mesh community has contributed a built-in service discovery to Prometheus, which is documented in the Prometheus docs. This service discovery connects to the control plane and retrieves all data plane proxies that have metrics enabled. Prometheus then scrapes those proxies and retrieves metrics according to your MeshMetric policies.

There are 3 ways you can run Prometheus:

- Inside the mesh (default for kumactl install observability).

- Outside the mesh. In this case you’ll need to specify tls.mode: disabled in the MeshMetric configuration. This is less secure but ensures Prometheus is as available as possible. It’s also easier to add to an existing setup with services in and outside the mesh.

- Outside the mesh with TLS enabled. In this case you’ll need to provide certificates for each data plane proxy and specify the configuration in the MeshMetric configuration. This is more secure than the second option but requires more configuration.

In production, we recommend the second option as it provides better visibility when things go wrong, and it’s usually acceptable for metrics to be less secure.
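For the second option (Prometheus outside the mesh, TLS disabled), a MeshMetric policy might look roughly like the sketch below. The policy name, namespace, port, and path are assumptions chosen for illustration, and the exact field names and the spelling of the TLS mode value should be checked against the MeshMetric reference for your Kong Mesh version.

```yaml
apiVersion: kuma.io/v1alpha1
kind: MeshMetric
metadata:
  name: metrics-default            # hypothetical policy name
  namespace: kong-mesh-system      # assumed control plane namespace
  labels:
    kuma.io/mesh: default
spec:
  targetRef:
    kind: Mesh                     # apply to every data plane proxy in the mesh
  default:
    backends:
      - type: Prometheus
        prometheus:
          port: 5670               # assumed metrics port on the sidecar
          path: /metrics
          tls:
            mode: Disabled         # lets a Prometheus outside the mesh scrape without TLS
```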

Using an already existing Prometheus setup

In Prometheus version 2.29 and later, you can add Kong Mesh metrics to your prometheus.yml:

```yaml
scrape_configs:
  - job_name: 'kuma-dataplanes'
    scrape_interval: "5s"
    relabel_configs:
      - source_labels:
          - __meta_kuma_mesh
        regex: "(.*)"
        target_label: mesh
      - source_labels:
          - __meta_kuma_dataplane
        regex: "(.*)"
        target_label: dataplane
      - action: labelmap
        regex: __meta_kuma_label_(.+)
    kuma_sd_configs:
      - server: "http://kong-mesh-control-plane.kong-mesh-system.svc:5676" # replace with the url of your control plane
```

For more information, see the Prometheus documentation.

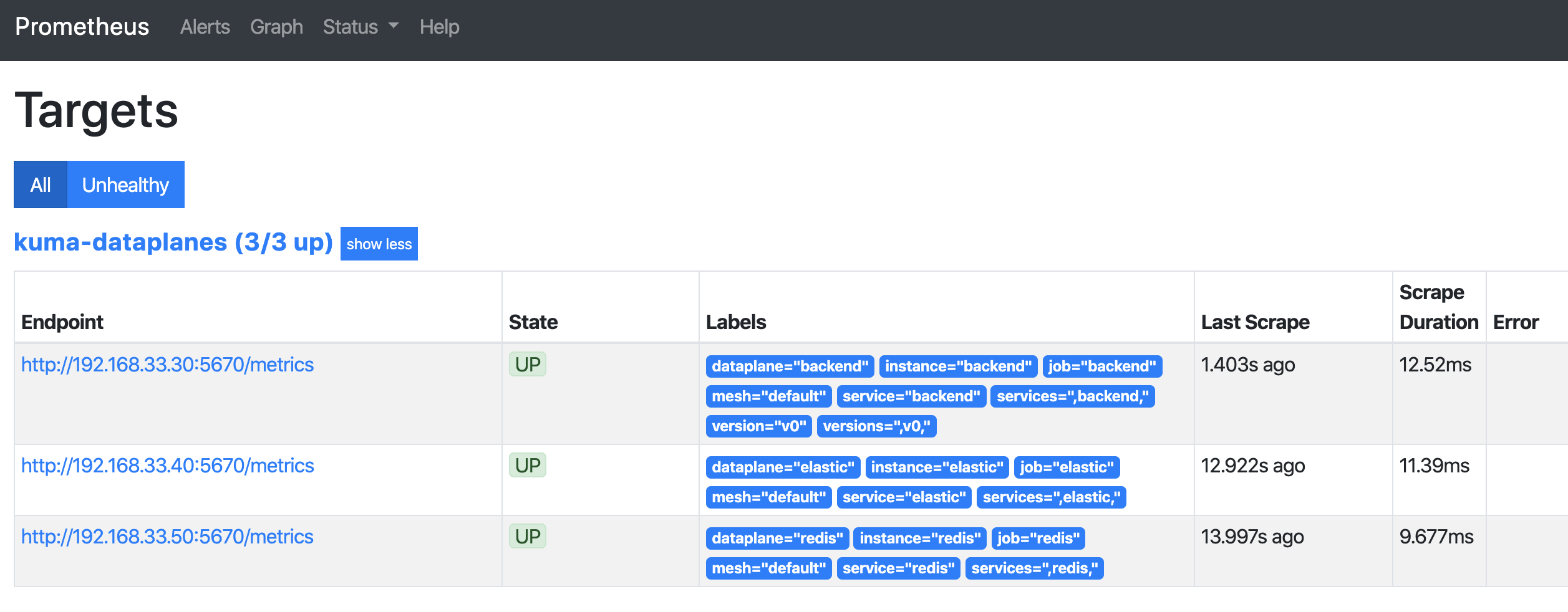

If you have MeshMetric enabled for your mesh, check the Targets page in the Prometheus dashboard. You should see a list of data plane proxies from your mesh. For example:

Configuring Grafana

Visualizing traces

To visualize your traces, you need to have Grafana up and running. kumactl install observability sets this up out of the box.

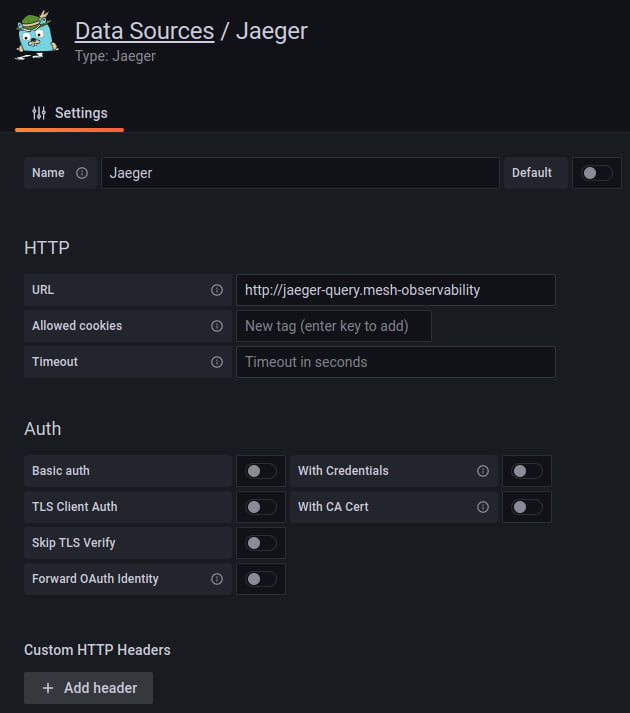

With Grafana installed, you can configure a new datasource with the URL http://jaeger-query.mesh-observability/ (or whatever URL Jaeger can be queried at).

Grafana will then be able to retrieve the traces from Jaeger.
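If you manage Grafana through provisioning files rather than the UI, the same datasource could be declared roughly as follows. The datasource name is arbitrary, and the URL is the one from the demo stack; adjust both for your installation.

```yaml
# Grafana datasource provisioning file (for example under provisioning/datasources/)
apiVersion: 1
datasources:
  - name: Jaeger            # arbitrary display name
    type: jaeger
    access: proxy           # Grafana's backend proxies the queries to Jaeger
    url: http://jaeger-query.mesh-observability/
```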

You can then add a MeshTrace policy to your mesh to start emitting traces. At this point you can visualize your traces in Grafana by choosing the jaeger datasource in the explore section.
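A minimal MeshTrace policy for the demo stack might look like the sketch below. It assumes the Jaeger collector accepts Zipkin-format spans at the address shown, which is a guess about the demo installation, and the policy name, namespace, and sampling value are illustrative. Verify the field names against the MeshTrace reference for your version.

```yaml
apiVersion: kuma.io/v1alpha1
kind: MeshTrace
metadata:
  name: default                    # hypothetical policy name
  namespace: kong-mesh-system      # assumed control plane namespace
  labels:
    kuma.io/mesh: default
spec:
  targetRef:
    kind: Mesh                     # trace all data plane proxies in the mesh
  default:
    backends:
      - type: Zipkin
        zipkin:
          # assumed collector address in the mesh-observability namespace
          url: http://jaeger-collector.mesh-observability:9411/api/v2/spans
    sampling:
      overall: 80                  # illustrative: sample 80% of requests
```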

Visualizing logs

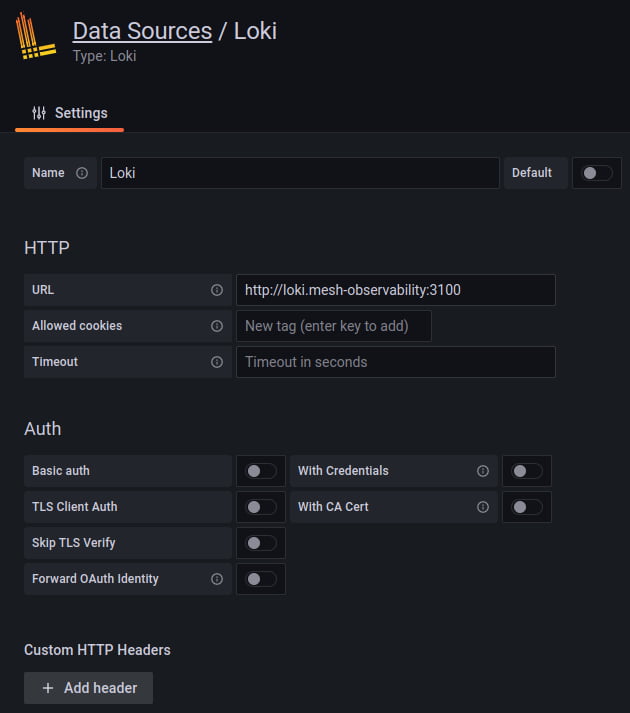

To visualize your containers’ logs and your access logs, you need to have Grafana up and running. kumactl install observability sets this up out of the box.

You can then add a MeshAccessLog policy to your mesh to start emitting access logs. Loki will pick up logs that are sent to stdout. To send logs to stdout you can configure the logging backend as shown below:

```yaml
apiVersion: kuma.io/v1alpha1
kind: Mesh
metadata:
  name: default
spec:
  logging:
    defaultBackend: stdout
    backends:
      - name: stdout
        type: file
        conf:
          path: /dev/stdout
```

At this point you can visualize your containers’ logs and your access logs in Grafana by choosing the loki datasource in the explore section.

For example, running: {container="kuma-sidecar"} |= "GET" will show all GET requests on your cluster.

To learn more about the search syntax check the Loki docs.

Nice to have

Having your logs and traces in the same visualization tool can come in really handy. By adding the traceId to your app logs, you can visualize your logs alongside the related Jaeger traces. To learn more about it, read this article.

Grafana extensions

The Kong Mesh community has built a datasource and a set of dashboards to provide great interactions between Kong Mesh and Grafana.

Datasource and service map

The Grafana Datasource is a datasource specifically built to relate information from the control plane with Prometheus metrics.

Current features include:

- Display the graph of your services with the MeshGraph using the Grafana nodeGraph panel.

- List meshes.

- List zones.

- List services.

To use the plugin you’ll need to add the binary to your Grafana instance by following the installation instructions.

To make things simpler the datasource is installed and configured when using kumactl install observability.

Dashboards

Kong Mesh ships with default dashboards that are available to import from the Grafana Labs repository.

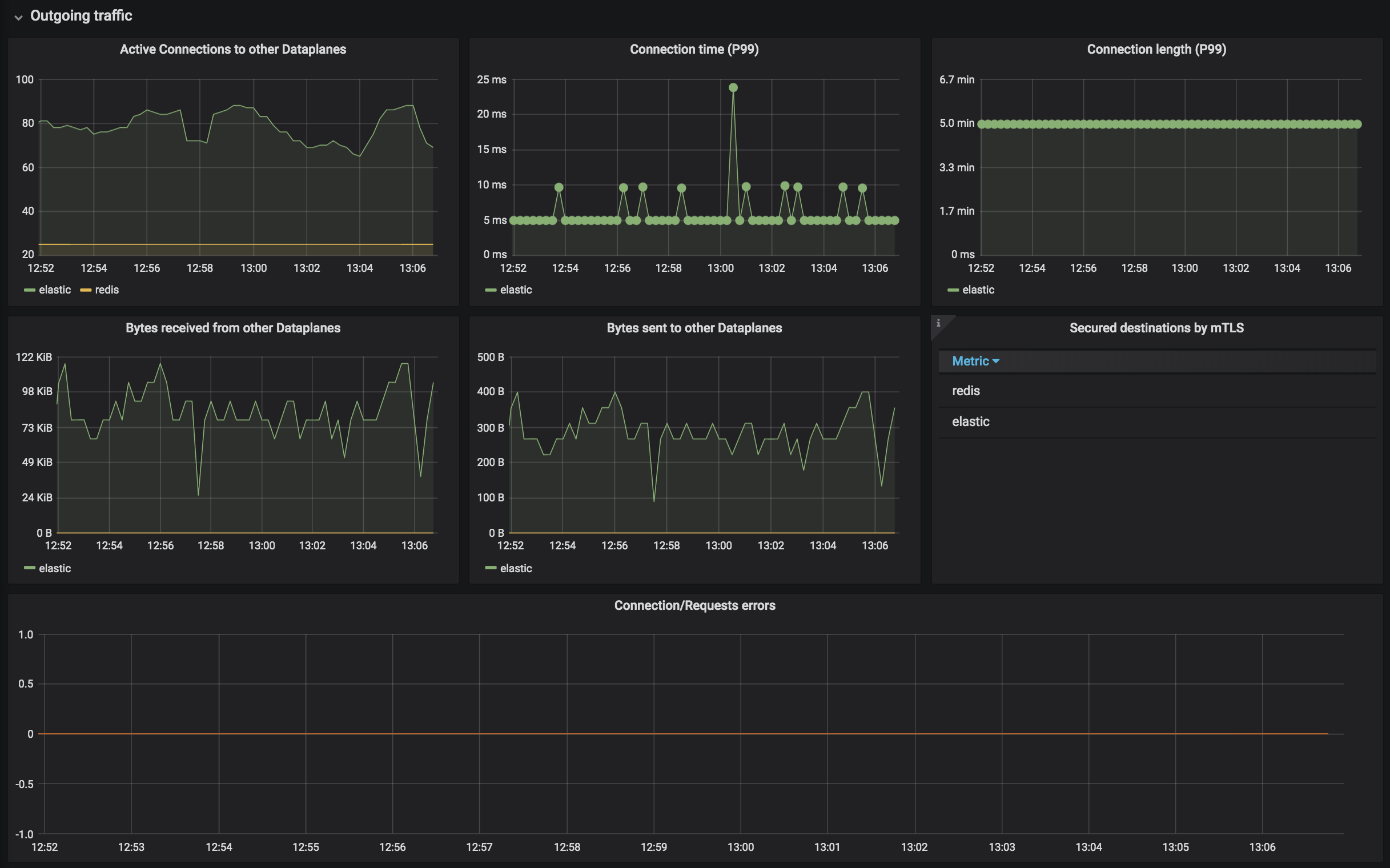

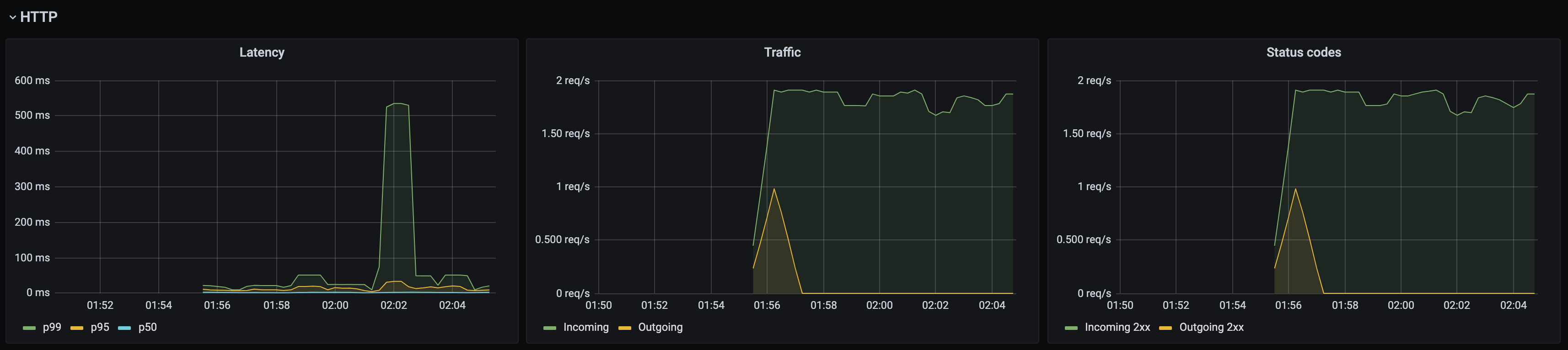

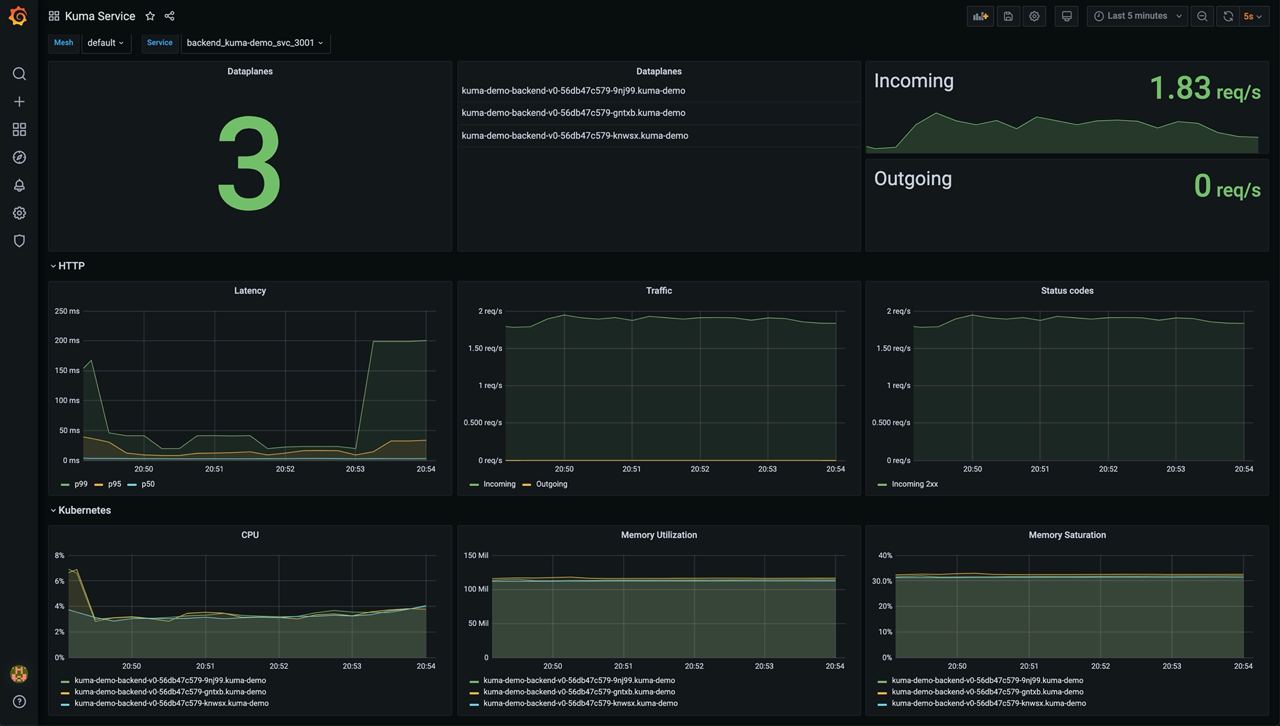

Kong Mesh Dataplane

This dashboard lets you investigate the status of a single data plane proxy in the mesh. To see those metrics, you need to create a MeshMetric policy first.

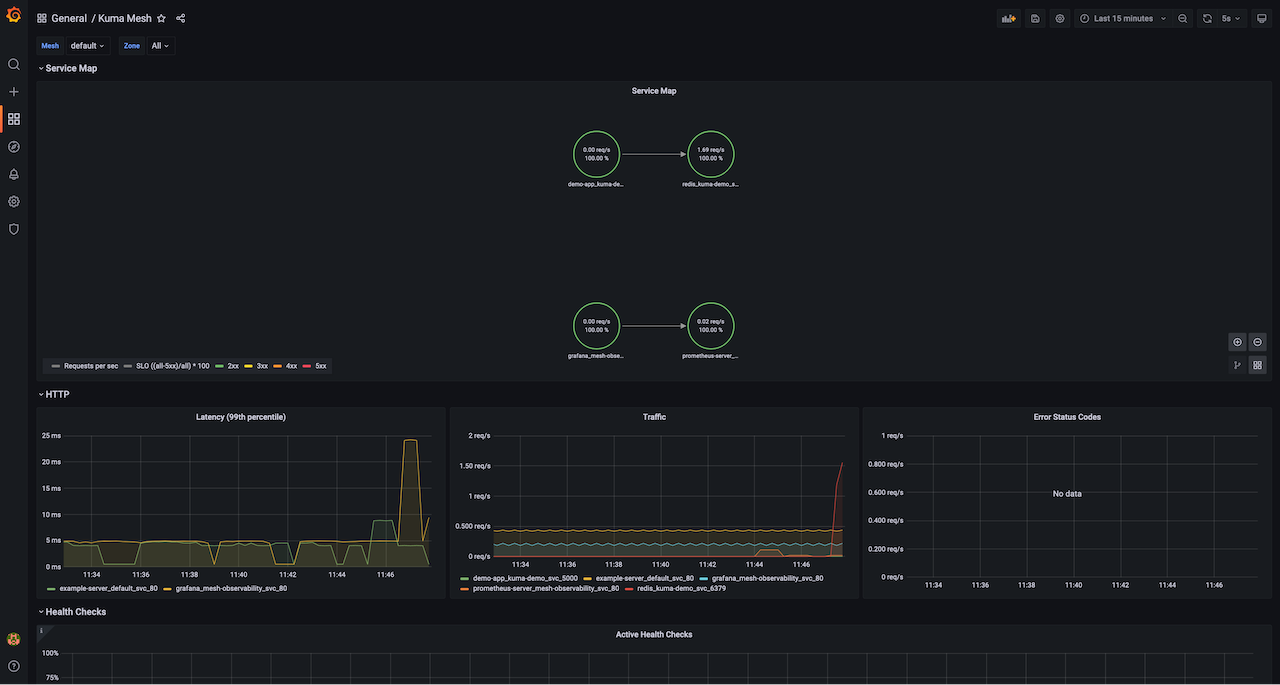

Kong Mesh Mesh

This dashboard lets you investigate the aggregated statistics of a single mesh. It provides a topology view of your service traffic dependencies (Service Map) and includes information such as number of requests and error rates.

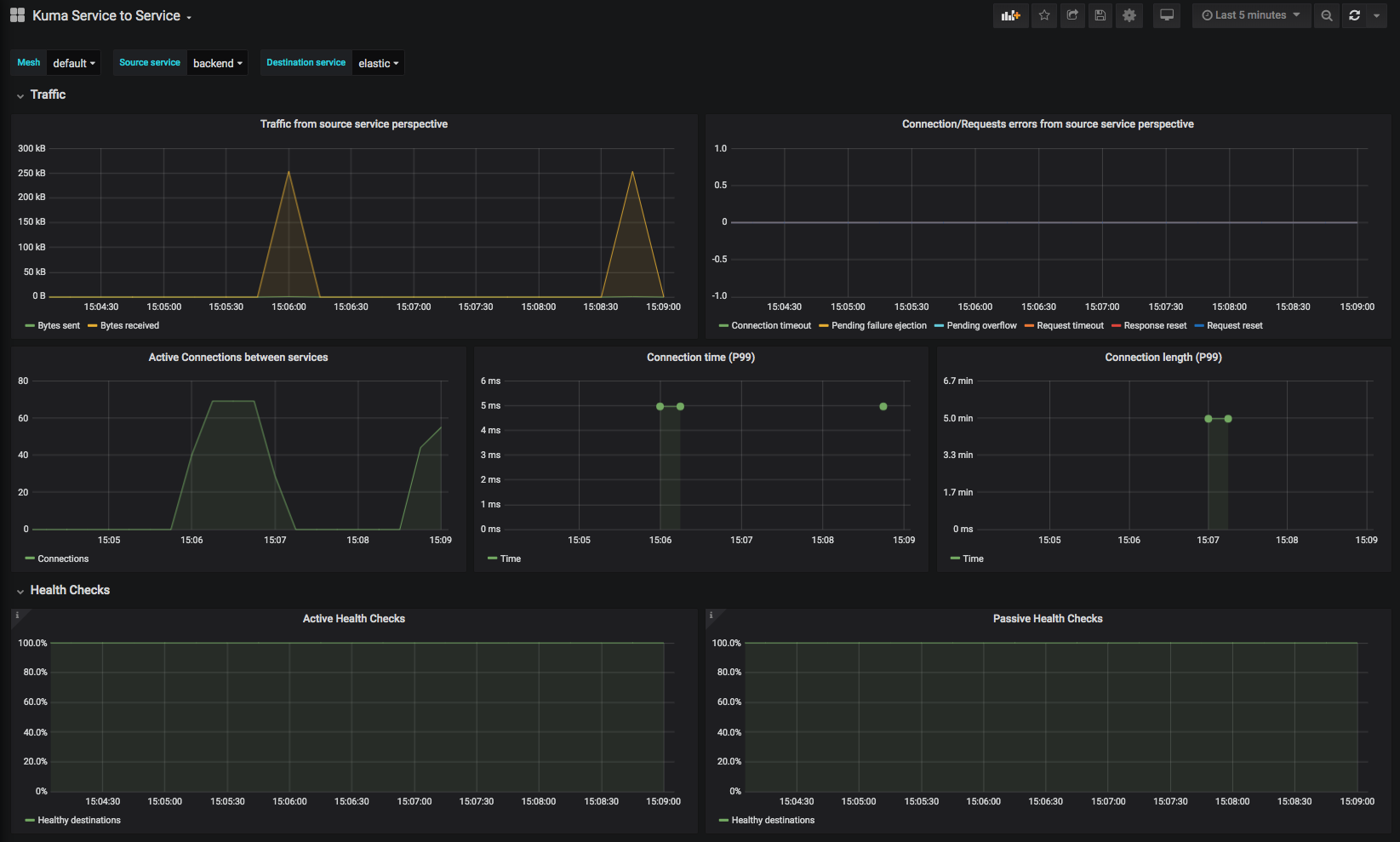

Kong Mesh Service to Service

This dashboard lets you investigate aggregated statistics from the data plane proxies of the specified source services to the data plane proxies of the specified destination service.

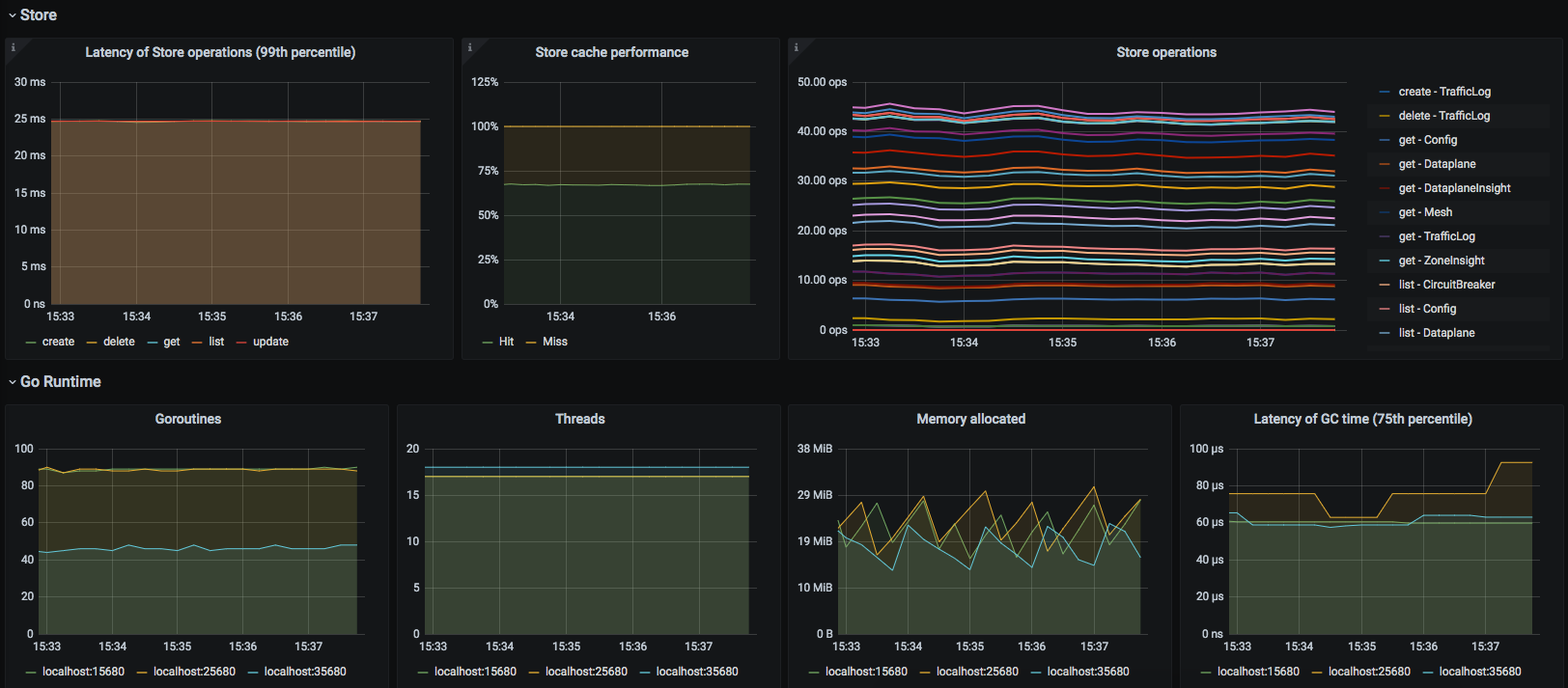

Kong Mesh CP

This dashboard lets you investigate control plane statistics.

Kong Mesh Service

This dashboard lets you investigate aggregated statistics for each service.

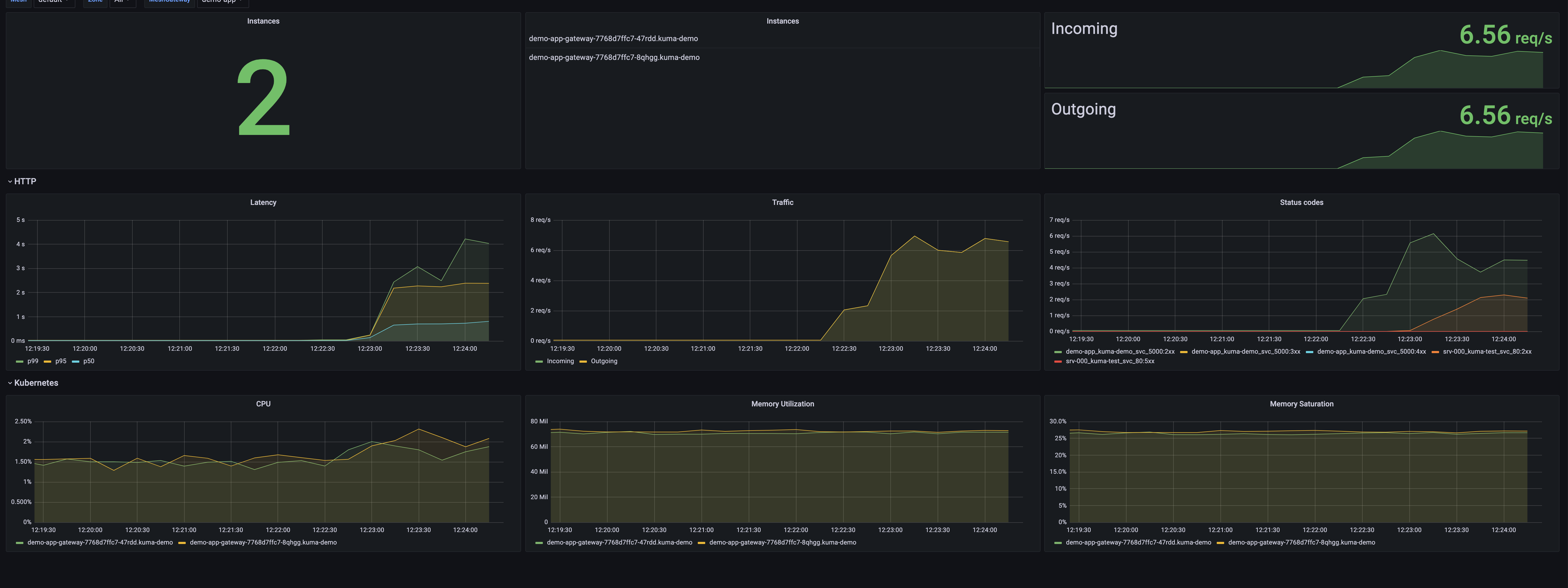

Kong Mesh MeshGateway

This dashboard lets you investigate aggregated statistics for each builtin gateway.

Configuring Datadog

The recommended way to use Datadog is with its agent.

The Datadog agent docs have in-depth installation methods.

Metrics

Kong Mesh exposes metrics in Prometheus format through the MeshMetric policy.

You can add annotations to your pods to enable the Datadog agent to scrape metrics.

Please refer to the dedicated documentation.
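As an illustration only, Datadog Autodiscovery annotations for an OpenMetrics check on the sidecar could look like the fragment below. The container name kuma-sidecar is taken from the log query example on this page; the port, path, and metric namespace are assumptions that must match your MeshMetric configuration.

```yaml
# Fragment of a pod template (not a complete manifest)
metadata:
  annotations:
    # "kuma-sidecar" is the container the check is attached to
    ad.datadoghq.com/kuma-sidecar.checks: |
      {
        "openmetrics": {
          "instances": [
            {
              "openmetrics_endpoint": "http://%%host%%:5670/metrics",
              "namespace": "kong_mesh",
              "metrics": [".*"]
            }
          ]
        }
      }
```

Collecting every metric with ".*" is convenient for a first test, but a narrower list is usually preferable once you know which metrics you need.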

Tracing

Configure the Datadog agent for APM.

If Datadog is not running on each node, you can expose the APM agent port to Kong Mesh via a Kubernetes Service:

```yaml
apiVersion: v1
kind: Service
metadata:
  name: trace-svc
spec:
  selector:
    app.kubernetes.io/name: datadog-agent-deployment
  ports:
    - protocol: TCP
      port: 8126
      targetPort: 8126
```

Apply the configuration with kubectl apply -f [..].

Check that the label of the installed Datadog pod has not changed (app.kubernetes.io/name: datadog-agent-deployment). If it has, adjust the selector accordingly.

Once the agent is configured to ingest traces you’ll need to configure a MeshTrace policy.
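A MeshTrace policy that points at the trace-svc Service defined above might look like this sketch. It assumes the Service lives in the default namespace, and the policy name and namespace are illustrative. Check the Datadog backend fields against the MeshTrace reference for your version.

```yaml
apiVersion: kuma.io/v1alpha1
kind: MeshTrace
metadata:
  name: datadog-tracing            # hypothetical policy name
  namespace: kong-mesh-system      # assumed control plane namespace
  labels:
    kuma.io/mesh: default
spec:
  targetRef:
    kind: Mesh
  default:
    backends:
      - type: Datadog
        datadog:
          # assumes trace-svc was created in the "default" namespace
          url: http://trace-svc.default.svc.cluster.local:8126
```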

Logs

The best way to have Kong Mesh and Datadog work together is with TCP ingest.

Once your agent is configured with TCP ingest you can configure a MeshAccessLog policy for data plane proxies to send logs.

Observability in multi-zone

Kong Mesh is multi-zone at heart. We explain here how to architect your telemetry stack to accommodate multi-zone.

Prometheus

When Kong Mesh is used in multi-zone mode, the recommended approach is to run one Prometheus instance in each zone and to send the metrics of each zone to a global Prometheus instance.

Prometheus offers different ways to do this:

- Federation: the global Prometheus scrapes the Prometheus instance in each zone.

- Remote Write: Prometheus in each zone writes metrics directly to the global instance. This is meant to be more efficient than federation.

- Remote Read: like remote write, but the other way around.
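The first two approaches can be sketched as Prometheus configuration. All host names below are placeholders, and the match selector assumes the kuma-dataplanes job name used in the scrape configuration earlier on this page.

```yaml
# Option 1, federation: goes in the GLOBAL Prometheus configuration
scrape_configs:
  - job_name: 'federate'
    honor_labels: true              # keep the labels set by the zone Prometheus
    metrics_path: '/federate'
    params:
      'match[]':
        - '{job="kuma-dataplanes"}' # only pull the data plane proxy series
    static_configs:
      - targets:
          - 'prometheus.zone-1.example.com:9090'   # placeholder zone address
          - 'prometheus.zone-2.example.com:9090'   # placeholder zone address
---
# Option 2, remote write: goes in EACH ZONE's Prometheus configuration.
# If the receiver is itself a Prometheus server, it must be started with
# --web.enable-remote-write-receiver.
remote_write:
  - url: 'http://prometheus.global.example.com:9090/api/v1/write'   # placeholder
```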

Jaeger, Loki, Datadog and others

Most telemetry components don’t have a hierarchical setup like Prometheus. If you want a central view of everything, you can set up the system in global and have each zone send its data to it. Because the zone is present in data plane tags, you shouldn’t need to worry about metrics, logs, and traces overlapping between zones.